Natural language processing problems (such as speech recognition, text-based data mining, and text or speech generation) are becoming increasingly important. Before many of these problems can be approached effectively, the syntactic structure of the sentences must be processed. Syntactic parsing is the task of constructing a parse tree over a sentence that describes the structure of the sentence. Parse trees are used in many language processing applications. In this paper, we present a multi-lingual dependency parser. Using advanced deep learning techniques, our parser architecture tackles common parsing issues such as long-distance head attachment, while using ‘architecture engineering’ to adapt to each target language in order to reduce the feature engineering often required for parsing tasks. We implement a parser based on this architecture that uses transfer learning techniques to address important issues related to limited-resource languages. We exceed the accuracy of state-of-the-art parsers on languages with limited training resources by a considerable margin. We present promising results for solving core problems in natural language parsing, while also performing at state-of-the-art accuracy on general parsing tasks.

The data-oriented parsing and recursive neural network models give an f-score of 87.1 with gold POS tags in the test set; on textual input, they achieve f-scores of 83.4 and 84.2, respectively. To overcome the data sparsity caused by morphological richness, lemmatization and unsupervised word clustering have been performed. A treebank should cover the most probable word orders of the language so that models can learn the various orders accurately. To analyze the word-order coverage of the treebank and the learning capability of different parsers, a test set covering different word orders has been prepared. This test set is evaluated with the best-performing parsing models: with gold POS tags, f-scores are above 90, and on textual input, the average f-score is 87.6.
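The f-scores quoted above are not defined in the text. A common choice for constituency parsing is the labeled bracketing F1, and the sketch below assumes that metric. The tuple-based tree format and the function names `labeled_spans` and `bracket_f1` are illustrative assumptions, not taken from the paper.

```python
from collections import Counter


def labeled_spans(tree, start=0, spans=None):
    """Collect (label, start, end) for every phrasal node.

    A tree is a nested tuple (label, child, ...); a leaf is a plain string.
    Preterminals such as ("NN", "dog") are skipped, as is usual for
    bracketing scores. Returns the span multiset and the end position.
    """
    if spans is None:
        spans = Counter()
    if isinstance(tree, str):
        return spans, start + 1
    label, children = tree[0], tree[1:]
    end = start
    for child in children:
        _, end = labeled_spans(child, end, spans)
    is_preterminal = len(children) == 1 and isinstance(children[0], str)
    if not is_preterminal:
        spans[(label, start, end)] += 1
    return spans, end


def bracket_f1(gold, predicted):
    """Harmonic mean of labeled bracket precision and recall."""
    gold_spans, _ = labeled_spans(gold)
    pred_spans, _ = labeled_spans(predicted)
    matched = sum((gold_spans & pred_spans).values())
    precision = matched / max(sum(pred_spans.values()), 1)
    recall = matched / max(sum(gold_spans.values()), 1)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical English example; the parser attaches "dog" to the wrong phrase.
gold = ("S", ("NP", ("DT", "the"), ("NN", "dog")), ("VP", ("VB", "barks")))
pred = ("S", ("NP", ("DT", "the")), ("VP", ("NN", "dog"), ("VB", "barks")))
score = bracket_f1(gold, pred)
```

In this toy pair only the `S` bracket matches, so precision and recall are both one third. Scores on a 0 to 100 scale, as in the text, would be this value multiplied by 100.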

[...] input type, lemmatization, word clusters), part-of-speech tag set, phrase labels, and the size of the training corpus are crucial for parsing such languages. In this paper, probabilistic context-free grammars, data-oriented parsing, and recursive neural network based models are evaluated with several linguistic features that improve the parsing results. The features include syntactic sub-categorization of POS tags, empirically learned horizontal and vertical markovizations, and lexical head words. These features enable dependency information for case markers and add phrasal and lexical context to the parse trees.
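As a rough illustration of the markovization features mentioned above, the following sketch shows one textbook formulation: vertical markovization as parent annotation, and horizontal markovization as right-binarization in which intermediate symbols remember only the last `h` sibling labels. The tree format, the label syntax (`^`, `|<...>`), and the function names are assumptions for illustration; the paper's own annotation scheme and learned orders are not given in the text.

```python
def label_of(node):
    """Label of a node; a leaf string stands for itself."""
    return node if isinstance(node, str) else node[0]


def parent_annotate(tree, parent=None):
    """Vertical markovization (order 2): append the parent label to phrasal labels."""
    if isinstance(tree, str):
        return tree
    label, children = tree[0], tree[1:]
    is_preterminal = len(children) == 1 and isinstance(children[0], str)
    if parent is None or is_preterminal:
        new_label = label
    else:
        new_label = f"{label}^{parent}"
    return (new_label,) + tuple(parent_annotate(c, label) for c in children)


def binarize(tree, h=1):
    """Horizontal markovization: right-binarize rules with more than two children.

    Each intermediate symbol records only the last h sibling labels already
    generated, so a smaller h yields fewer, more general symbols.
    """
    if isinstance(tree, str):
        return tree
    label = tree[0]
    children = [binarize(c, h) for c in tree[1:]]
    if len(children) <= 2:
        return (label,) + tuple(children)

    def chain(i):
        # Intermediate node covering children[i:], for i >= 1.
        history = [label_of(c) for c in children[max(0, i - h):i]]
        inter = f"{label}|<{'-'.join(history)}>"
        if len(children) - i == 2:
            return (inter, children[i], children[i + 1])
        return (inter, children[i], chain(i + 1))

    return (label, children[0], chain(1))


# Hypothetical flat noun phrase with four children.
flat_np = ("NP", ("DT", "a"), ("JJ", "big"), ("JJ", "red"), ("NN", "ball"))
binarized = binarize(flat_np, h=1)
annotated = parent_annotate(("S", ("NP", ("NN", "dog")), ("VP", ("VB", "barks"))))
```

With `h=1` the intermediate symbols are `NP|<DT>` and `NP|<JJ>`; raising `h` to 2 turns the second one into `NP|<DT-JJ>`, trading generalization for more context.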

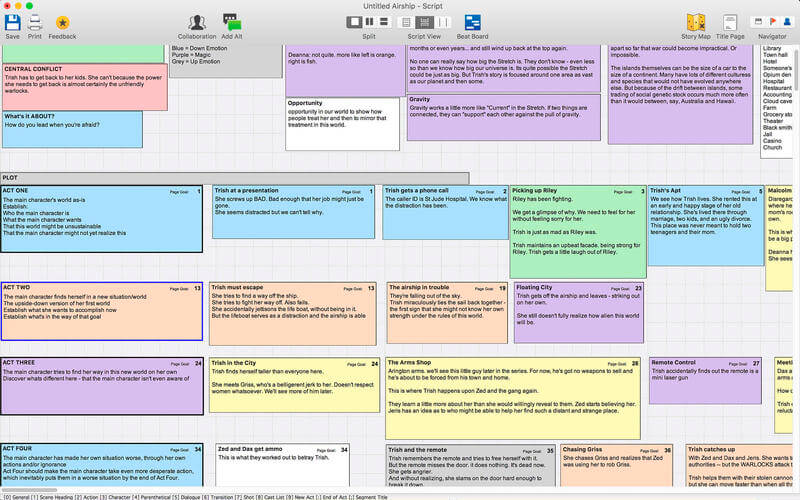


This paper presents an analysis of experiments with statistical and neural parsing techniques for Urdu, a widely spoken South Asian language. We demonstrate state-of-the-art constituency parsing results for an Urdu treebank. Urdu is a morphologically rich language characterized by free word order.
